CloudFilt: Protect Your Website Today!

Tired of bots, spam, and sketchy clicks wrecking your vibe and budget? CloudFilt is the quiet friend who plugs in fast, filters the junk in real time, and shows you exactly what it blocked so your site stays clean and quick. Turn on CloudFilt, keep only real people, and watch those visits turn into sales with less stress.

Friend to friend: a few links are affiliate links. When you purchase, I might get a tiny thank-you from the company, with zero added cost to you. I only recommend things that I’ve actually tried and looked into. Nothing here is financial advice; it is for entertainment. Read the full affiliate disclosure and privacy policy.

Crawler traffic jumped this year. Install CloudFilt on WordPress, watch events in Monitor, then enforce where abuse is obvious. You’ll clean up GA4 data quickly, and you can test up to 3,000 requests free.

CloudFilt gives you a simple counter-move. You install a small code snippet or the official WordPress plugin, start in Monitor to watch events, then enforce rules when you’re confident.

It’s designed for non-technical teams and includes a free allowance to prove results before you buy.

This guide walks you through a no-code setup on WordPress, a six-step plan to deter scrapers, and a quick way to separate humans from bots in GA4 so your decisions rest on clean data.

You’ll also see why robots.txt isn’t enough on its own and how industry defaults are shifting toward active blocking and “Pay Per Crawl” options.

Disclaimer: No single control blocks every bot. Use layered defenses and review logs regularly.

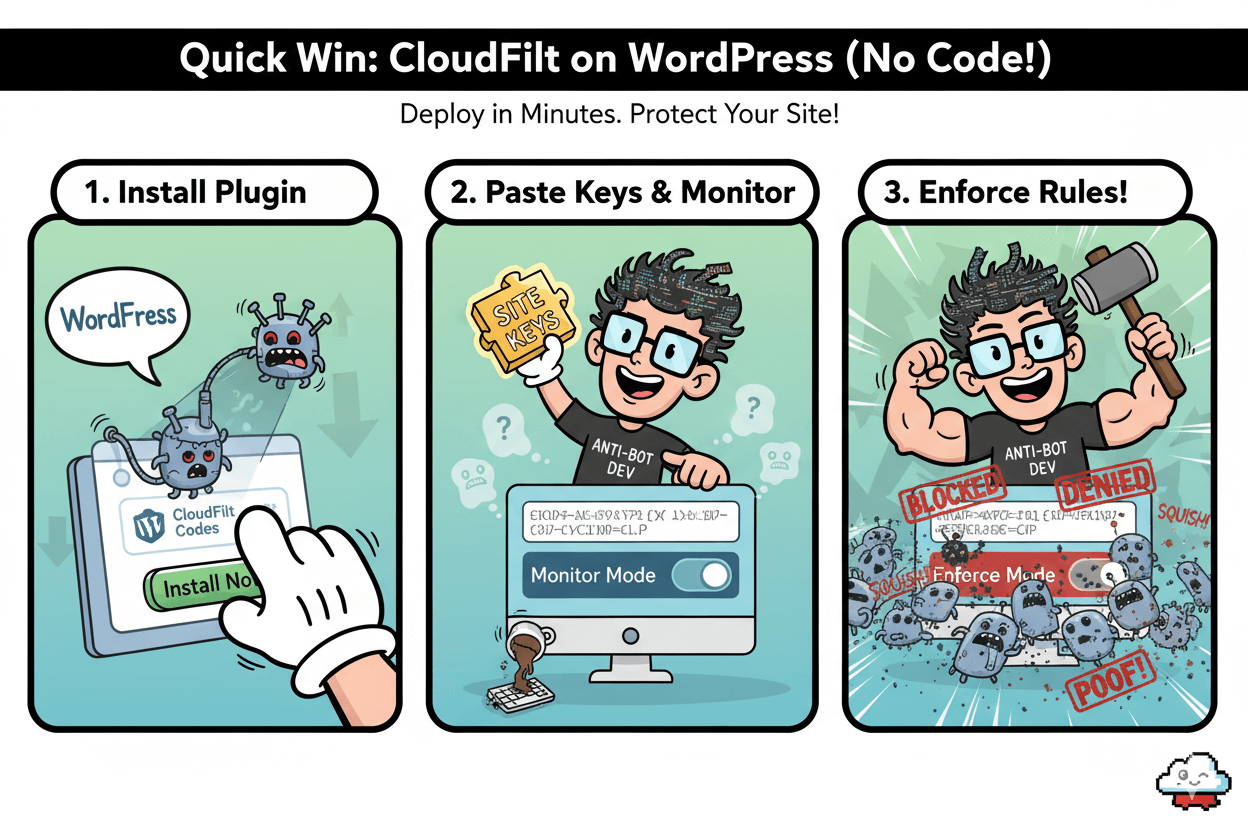

Quick win: Set up CloudFilt on WordPress (no code)

Deploy in minutes. Start in Monitor, then enforce once events look clean.

Install the plugin and paste your codes

Add the CloudFilt Codes plugin from WordPress. It inserts the CloudFilt codes that enable bot, scraper, spam-submission, and fraud protection on your site.

Activate it, then paste your site keys to connect your dashboard. The plugin listing shows what it covers and how recently it was maintained.

Quick win: If you run WordPress.com or prefer their interface, the same plugin is available there with version, rating, and last-updated details visible before install.

Enable presets and start in Monitor mode

Open your CloudFilt dashboard. Keep presets on, but begin in Monitor so you see which requests would be challenged or blocked. This reduces the chance of over-blocking early.

CloudFilt’s WordPress integration page documents the CMS path and provides a direct “Download for WordPress” link.

Myth buster: You do not need to change theme code. The plugin injects the necessary codes and CloudFilt analyzes behavior across front- and back-end traffic streams.

Verify traffic events before enforcing rules

Watch for spikes in bot categories, scraper hits, or spam submissions in your events. Once patterns are clear, flip to Enforce for abusive UAs, IPs, or ASNs. This staged rollout aligns with CloudFilt’s use cases and typical WordPress workflows.

Before and after: In user reviews, teams report quick reductions in automated hits after enabling CloudFilt on WordPress, with minimal friction for real visitors. Use these anecdotes as directional signals while you validate in your own logs.

Free proof window:

CloudFilt’s pricing page confirms a free trial of 3,000 requests with most features and no credit card. Use this window to baseline metrics, run Monitor, then Enforce and compare week-over-week.

Disclaimer: No single control blocks every bot. Start in Monitor, review logs, then enforce and tune.

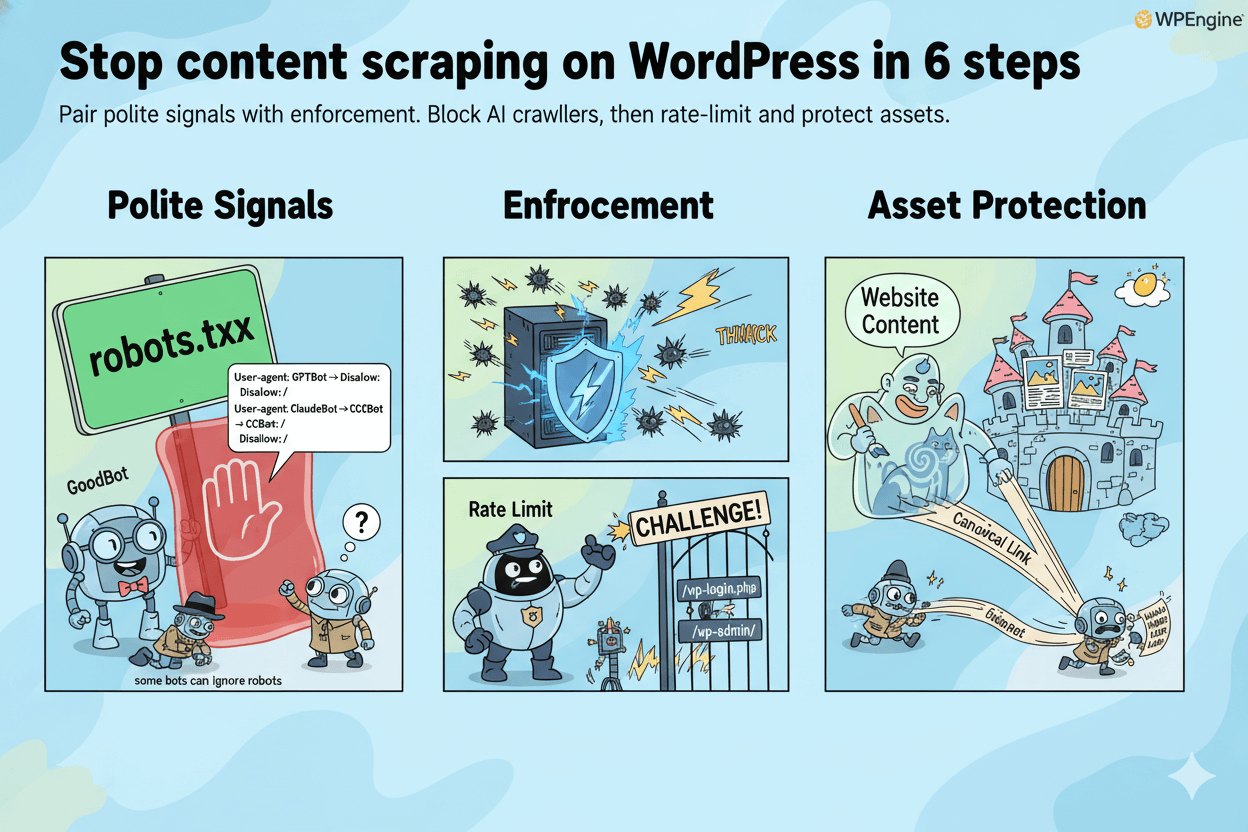

Stop content scraping on WordPress in 6 steps

Pair polite signals with enforcement. Block AI crawlers, then rate-limit and protect assets.

Block AI crawlers via robots.txt and headers

Start with polite controls. In robots.txt, disallow known AI crawlers such as GPTBot, ClaudeBot, and CCBot. Back it up with X-Robots-Tag or page-level robots meta when you need URL-specific rules. These signals are foundational, but they depend on crawler compliance.

Example lines:

    User-agent: GPTBot
    Disallow: /

    User-agent: ClaudeBot
    Disallow: /

    User-agent: CCBot
    Disallow: /

Reality check: some bots can ignore robots or mask identity, so plan enforcement next.
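Before you publish rules like the example lines above, it can help to check that they say what you intend. Here is a minimal sketch using Python's standard-library `urllib.robotparser`. The `example.com` URL and the `ROBOTS_TXT` string are placeholders, and the result only tells you what a compliant crawler would do, not what a misbehaving one will do.

```python
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt content mirroring the example lines above.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: CCBot
Disallow: /
"""


def allowed_agents(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return, per user-agent, whether a compliant crawler may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


result = allowed_agents(
    ROBOTS_TXT,
    "https://example.com/blog/post",  # placeholder URL
    ["GPTBot", "ClaudeBot", "CCBot", "Googlebot"],
)
for agent, ok in result.items():
    print(f"{agent}: {'allowed' if ok else 'disallowed'}")
```

With these rules the three AI crawlers come back disallowed, while Googlebot stays allowed because no rule names it.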

Turn on bot rules and gentle rate limits

Use your bot manager or WAF to challenge repeated requests, throttle abusive IPs/ASNs, and block suspicious UAs on high-risk paths like /wp-login.php, /wp-admin/, and sitemap or feed endpoints. Start conservative, observe logs, then tighten.

Industry moves now default to blocking many AI crawlers, which underscores the need for active controls beyond robots.txt.

Pro tip: If a crawler claims compliance but still hammers your site, require challenges on patterns like high request bursts or headless UAs, and keep allowlists only for verified good bots. Reports of “stealth crawling” show why behavior-based rules matter.
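Your bot manager or WAF does the throttling for you, so you will not normally write this yourself. To make "gentle rate limits" concrete, here is a minimal sliding-window sketch. It is an illustration only, not how CloudFilt or any specific WAF implements throttling. The class name, the limit of 3 requests per 10 seconds, and the sample IP are all assumptions.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests per client within `window` seconds."""

    def __init__(self, limit: int, window: float) -> None:
        self.limit = limit
        self.window = window
        self._hits = defaultdict(deque)  # client -> recent request timestamps

    def allow(self, client: str, now: float) -> bool:
        hits = self._hits[client]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        if len(hits) >= self.limit:
            return False  # over the limit: challenge or throttle this request
        hits.append(now)
        return True


# Hypothetical burst from one client: four quick requests, then a pause.
limiter = SlidingWindowLimiter(limit=3, window=10.0)
decisions = [limiter.allow("203.0.113.7", t) for t in (0.0, 1.0, 2.0, 3.0, 12.5)]
print(decisions)  # [True, True, True, False, True]
```

Starting with a generous limit and tightening it after reviewing logs matches the "start conservative" advice above.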

Protect images and RSS against copy-paste scraping

Stop free bandwidth to scrapers by disabling image hotlinking at the server/CDN. Add watermarks for commercial visuals. Then shorten or delay RSS excerpts so clones don’t publish full posts faster than you. Add canonical links in templates to reinforce ownership.
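Hotlink protection is usually a server or CDN setting, but the underlying check is simple. The sketch below shows the idea: compare the request's Referer host against your own hosts. `ALLOWED_HOSTS` and the function name are hypothetical, and letting empty referers through is a design assumption you may want to change.

```python
from typing import Optional
from urllib.parse import urlparse

ALLOWED_HOSTS = {"example.com", "www.example.com"}  # hypothetical site hosts


def is_hotlink(referer: Optional[str], allowed_hosts=ALLOWED_HOSTS) -> bool:
    """Return True when an image request was referred by a page on another site."""
    if not referer:
        # Empty referers are common for direct visits and privacy tools,
        # so this sketch lets them through rather than risk blocking people.
        return False
    host = (urlparse(referer).hostname or "").lower()
    return host not in allowed_hosts


print(is_hotlink("https://www.example.com/post"))     # False
print(is_hotlink("https://scraper.example.net/copy"))  # True
```

Referers are easy to forge, so treat this as a bandwidth saver rather than a security control.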

Why this works:

- Signals inform well-behaved bots.

- Enforcement handles non-compliant agents.

- Asset controls cut easy copy paths and bandwidth loss.

If your logs show evasion (UA spoofing, rotating ASNs), keep evidence and escalate rules. Some vendors dispute specific allegations, so act on your own traffic data while the debate plays out.

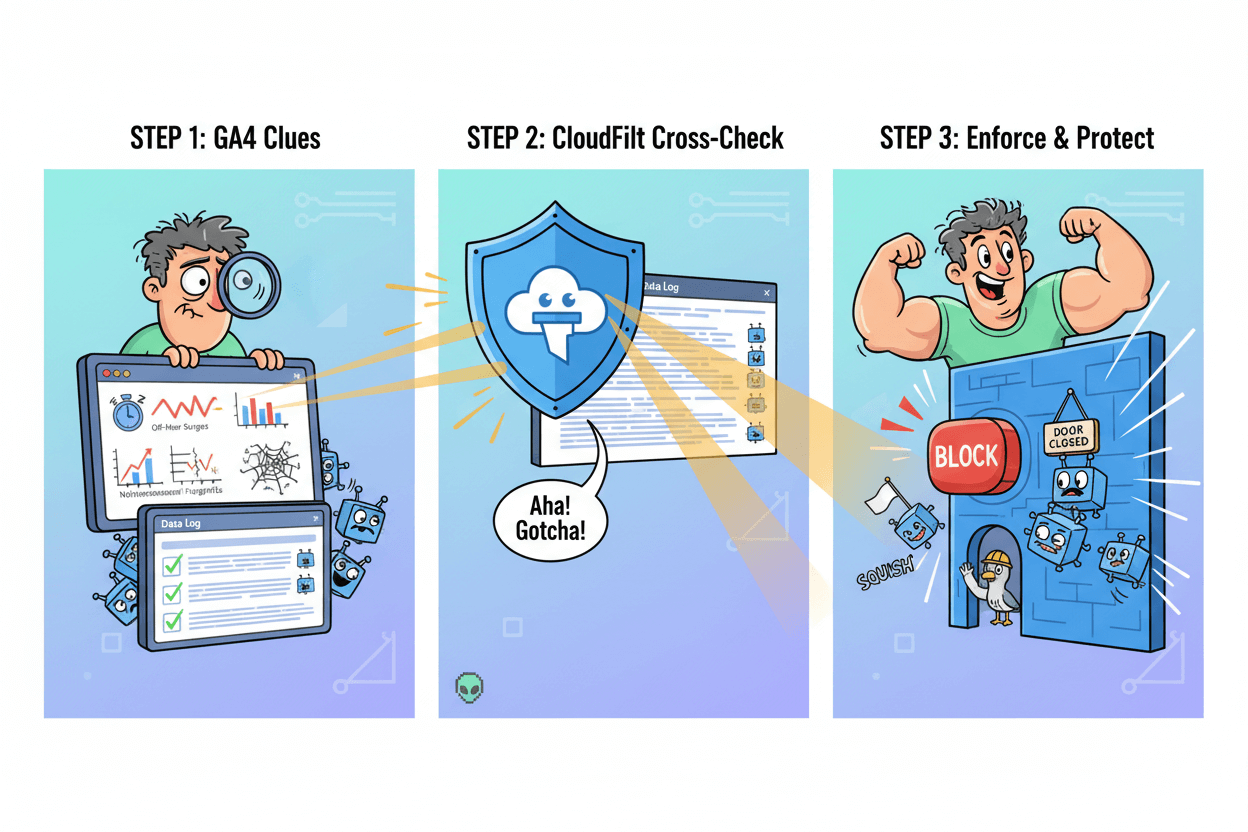

Tell bots from humans in GA4

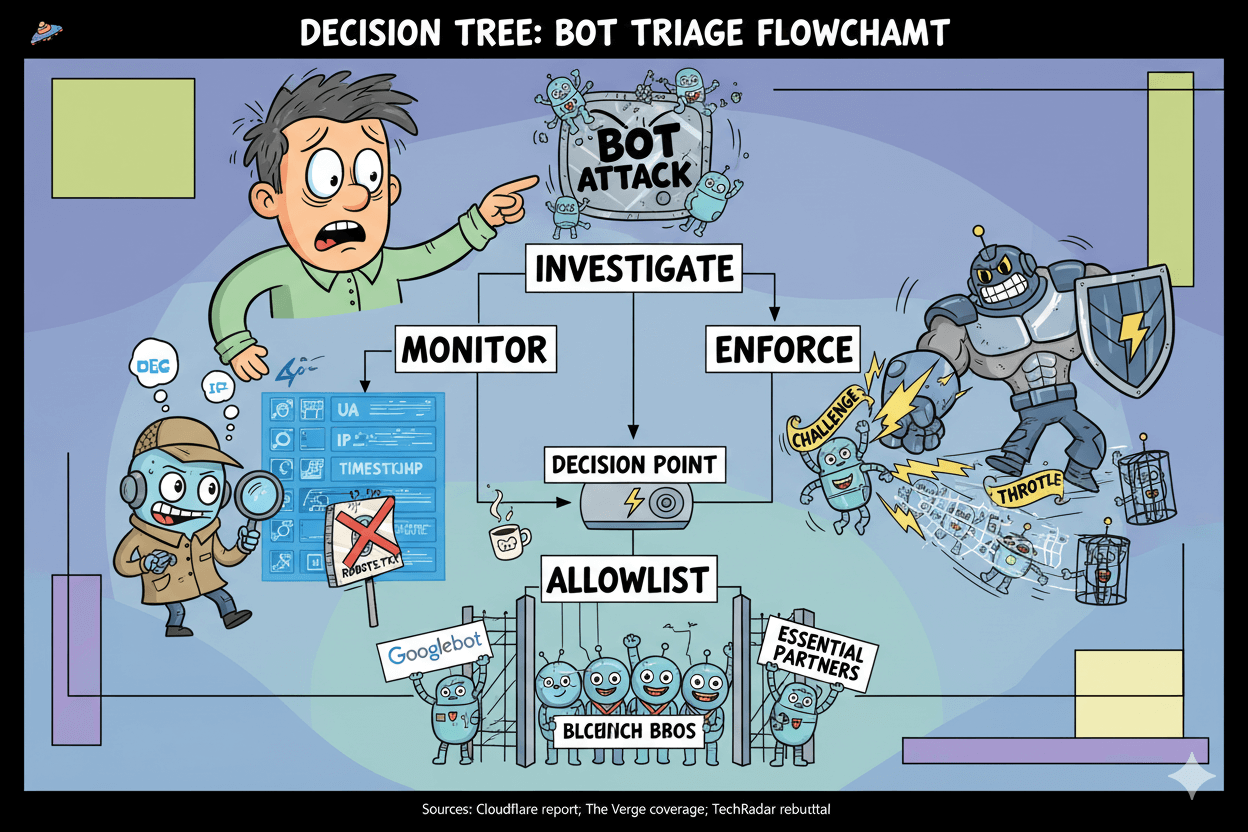

GA4 hides known bots, not all bots. Use anomaly segments and cross-check with CloudFilt logs.

Confirm GA4 Known bot exclusion and limits

GA4 excludes traffic classified as known bots and spiders using Google research and the IAB/ABC International Spiders & Bots List. This is automatic and not user-configurable.

Treat it as a baseline, not a full solution, because only declared or identified bots get filtered here.

Micro challenge: In Admin → Data collection and modification → Data filters, enable filters for Internal traffic and Developer traffic so office visits and debug hits don’t pollute your checks. These are property-level and non-retroactive.

Build bot-signal explorations and segments

Create an Exploration that hunts for bot-like patterns:

- Zero-engagement clusters: Sessions with 0 pageviews, 0 engagement time, or 100% bounce equivalents by source / medium.

- Off-hour surges: Abnormal traffic at times your audience rarely visits.

- Network fingerprints: Concentrations by ASN or repeating / sequential IPs. An ASN (autonomous system number) identifies a single operator’s routed network, which is useful when abuse clusters. GA4 does not report IPs or ASNs, so pull these from your server or CloudFilt logs.

- UA oddities: Headless or outdated user-agents hitting the same paths.

If you need a quick heuristic, scan Source/Medium for strings that literally contain “bot” and then validate, since some low-effort traffic labels itself.
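If you export an Exploration to a spreadsheet, the checks above can be scripted. The sketch below is one way to do it under stated assumptions: the rows, the column names, and every threshold are hypothetical and are not GA4 field names. Tune them to your own traffic.

```python
from statistics import median

# Hypothetical rows shaped like an Exploration export grouped by
# source / medium and hour of day. Column names are assumptions.
ROWS = [
    {"source_medium": "google / organic", "hour": 14, "sessions": 120, "engaged_sessions": 78},
    {"source_medium": "(direct) / (none)", "hour": 3, "sessions": 400, "engaged_sessions": 2},
    {"source_medium": "newsletter / email", "hour": 9, "sessions": 60, "engaged_sessions": 41},
    {"source_medium": "trafficbot.example / referral", "hour": 11, "sessions": 35, "engaged_sessions": 0},
]


def flag_rows(rows, off_hours=range(1, 6), min_sessions=30,
              max_engagement_rate=0.05, surge_factor=3.0):
    """Return {source_medium: [reasons]} for rows that look bot-like."""
    typical = median(r["sessions"] for r in rows)
    flagged = {}
    for r in rows:
        reasons = []
        rate = r["engaged_sessions"] / r["sessions"] if r["sessions"] else 0.0
        if r["sessions"] >= min_sessions and rate <= max_engagement_rate:
            reasons.append("near-zero engagement")
        if r["hour"] in off_hours and r["sessions"] >= surge_factor * typical:
            reasons.append("off-hour surge")
        if "bot" in r["source_medium"].lower():
            reasons.append("self-labelled bot")
        if reasons:
            flagged[r["source_medium"]] = reasons
    return flagged


flagged = flag_rows(ROWS)
for source, reasons in flagged.items():
    print(source, "->", ", ".join(reasons))
```

A flag is a prompt to investigate, not proof. Validate each flagged source against your logs before you block anything.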

Cross-validate with CloudFilt dashboard events

Match your GA4 segments against bot-manager logs for certainty. If CloudFilt shows repeated requests from the same ASN, IP range, or suspicious UA on paths like /wp-login.php or feeds, you’ve likely found residual bots that GA4 didn’t auto-exclude.

That’s your cue to enforce targeted rules while keeping GA4 segments as a detection canary.
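If you also keep raw server logs, a short script can surface the same repeat offenders for comparison. This sketch assumes the common "combined" access-log layout. The sample lines, IPs, and the threshold of three hits are invented for illustration, so adjust the pattern to your own server's format.

```python
import re
from collections import Counter

# Assumes the "combined" access-log layout; adjust to your server's format.
LOG_LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" \d{3} \S+ '
    r'"[^"]*" "(?P<ua>[^"]*)"'
)
SENSITIVE_PREFIXES = ("/wp-login.php", "/feed")

# Invented sample lines using reserved documentation IP ranges.
SAMPLE_LINES = [
    '198.51.100.23 - - [10/Oct/2025:03:14:07 +0000] "POST /wp-login.php HTTP/1.1" 200 1532 "-" "python-requests/2.31"',
    '198.51.100.23 - - [10/Oct/2025:03:14:08 +0000] "POST /wp-login.php HTTP/1.1" 200 1532 "-" "python-requests/2.31"',
    '198.51.100.23 - - [10/Oct/2025:03:14:09 +0000] "POST /wp-login.php HTTP/1.1" 200 1532 "-" "python-requests/2.31"',
    '203.0.113.9 - - [10/Oct/2025:09:02:11 +0000] "GET /feed/ HTTP/1.1" 200 8421 "-" "Mozilla/5.0"',
    '203.0.113.9 - - [10/Oct/2025:09:02:40 +0000] "GET /about/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]


def repeat_offenders(lines, min_hits=3):
    """Count hits per (ip, path, user-agent) on sensitive paths."""
    hits = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if m and m.group("path").startswith(SENSITIVE_PREFIXES):
            hits[(m.group("ip"), m.group("path"), m.group("ua"))] += 1
    return [(key, n) for key, n in hits.most_common() if n >= min_hits]


offenders = repeat_offenders(SAMPLE_LINES)
for (ip, path, ua), n in offenders:
    print(f"{n} hits on {path} from {ip} ({ua})")
```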

Do’s and don’ts:

- Do document the ASN/IP evidence before blocking. Don’t block reputable crawlers without review.

- Do re-test after enabling rules. Don’t expect GA4 filters to catch stealthy traffic.

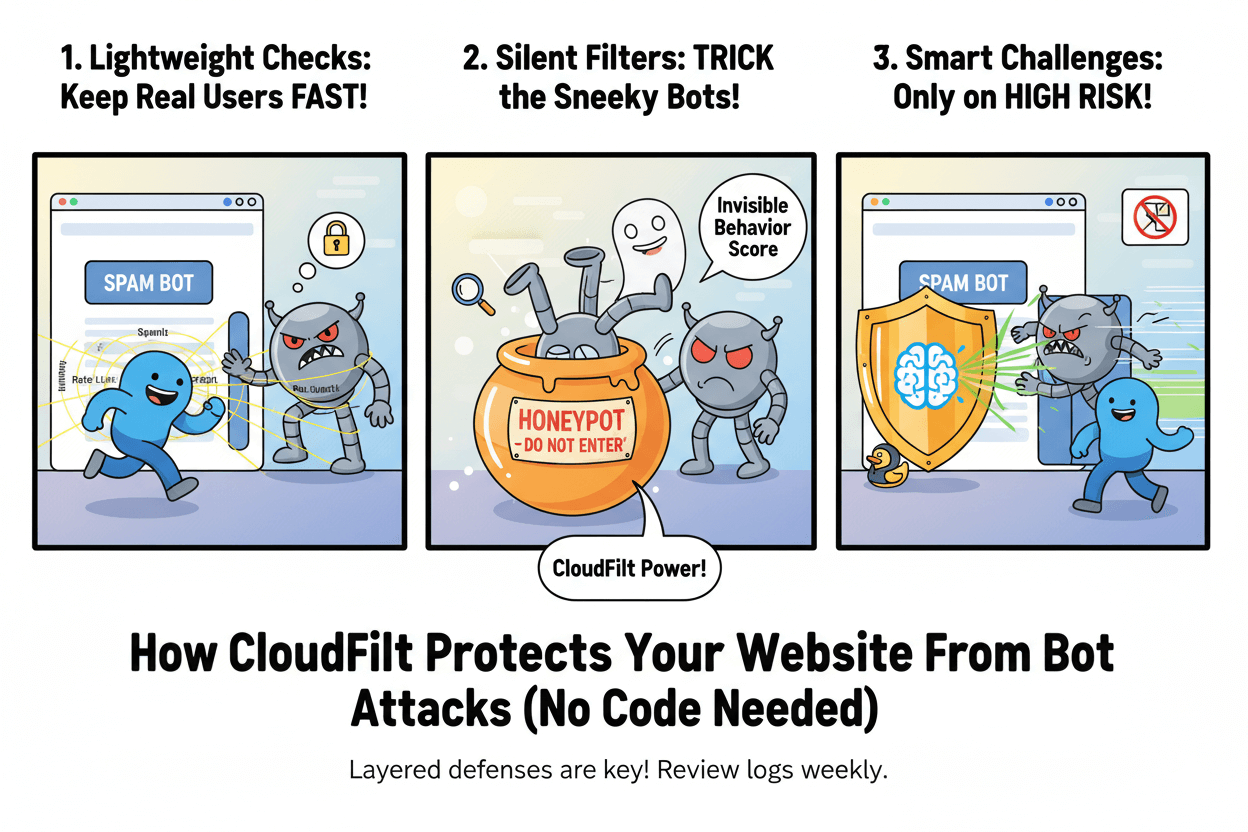

Harden logins and forms without friction

Throttle abuse and challenge only on risk. Keep real users fast.

Limit login attempts and apply lightweight checks

Set login throttles and temporary lockouts after repeated failures. Progressive delays slow password guessing and reduce server strain. Focus on /wp-login.php and /wp-admin/ endpoints.

This aligns with OWASP guidance and WordPress hardening practices.

On managed WordPress, rate limits like “~5 failed attempts in 5 minutes” per IP/username are common baselines; tune to your audience and monitor impact.
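To show how a lockout with progressive delays fits together, here is a minimal sketch. It uses the "5 failures in 5 minutes" baseline from above. The 60-second first lockout, the doubling rule, and the class name are assumptions, and a security plugin or your host would normally handle this for you.

```python
from collections import defaultdict


class LoginThrottle:
    """Lock a key (for example an IP/username pair) after repeated failures."""

    def __init__(self, max_failures=5, window=300.0, base_lockout=60.0):
        self.max_failures = max_failures
        self.window = window              # seconds in which failures are counted
        self.base_lockout = base_lockout  # first lockout length (assumption)
        self._failures = defaultdict(list)  # key -> recent failure timestamps
        self._lockouts = defaultdict(int)   # key -> lockouts applied so far
        self._locked_until = {}

    def is_locked(self, key, now: float) -> bool:
        return now < self._locked_until.get(key, 0.0)

    def record_failure(self, key, now: float) -> float:
        """Record a failed login; return the lockout seconds applied (0.0 if none)."""
        recent = [t for t in self._failures[key] if now - t < self.window]
        recent.append(now)
        self._failures[key] = recent
        if len(recent) < self.max_failures:
            return 0.0
        # Progressive delay: each new lockout doubles the previous one.
        duration = self.base_lockout * (2 ** self._lockouts[key])
        self._lockouts[key] += 1
        self._locked_until[key] = now + duration
        self._failures[key] = []
        return duration


throttle = LoginThrottle()
key = ("203.0.113.7", "admin")  # hypothetical IP/username pair
first = [throttle.record_failure(key, float(t)) for t in range(0, 50, 10)]
print(first)                          # [0.0, 0.0, 0.0, 0.0, 60.0]
print(throttle.is_locked(key, 45.0))  # True
```

Doubling the lockout on each repeat keeps a real person's occasional typo cheap while making sustained guessing slow.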

Stop spam form bots with low-friction tactics

Keep real users fast. Combine behavior scoring and background checks with silent spam filters or honeypots before adding any challenge. This preserves accessibility and reduces abandonment.

Vendor and community guidance show CAPTCHA-free options can block high volumes of automated form posts.

If abuse persists, escalate gradually: add lightweight challenges only on suspicious bursts or headless user-agents, not for everyone.
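A honeypot plus a timing check is one of the simplest silent filters. The sketch below illustrates the idea only. The field name `website_url` and the 3-second minimum are assumptions, and most form plugins ship their own version of this.

```python
MIN_FILL_SECONDS = 3.0  # assumption: people rarely finish a form faster than this


def looks_like_spam(form: dict, rendered_at: float, submitted_at: float,
                    honeypot_field: str = "website_url") -> bool:
    """Return True when a submission trips the honeypot or arrives too quickly."""
    # Honeypot: the field is hidden from people, so any value suggests a bot.
    if str(form.get(honeypot_field, "")).strip():
        return True
    # Timing: near-instant submissions suggest automation.
    return (submitted_at - rendered_at) < MIN_FILL_SECONDS


human = {"email": "reader@example.com", "message": "Hi!", "website_url": ""}
bot = {"email": "x@example.com", "message": "Buy now", "website_url": "http://spam.example"}

print(looks_like_spam(human, rendered_at=100.0, submitted_at=112.0))  # False
print(looks_like_spam(bot, rendered_at=100.0, submitted_at=112.0))    # True
```

If you build the hidden field yourself, hide it from assistive technology as well, so screen-reader users are not invited to fill it in.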

Use country/ASN rules when attacks cluster

When logs show repeated attempts from the same ASN or region, add targeted throttles or temporary blocks instead of global challenges. This focuses friction where it’s warranted and limits collateral impact on legitimate users.

Pair these rules with evidence from your bot dashboard and server logs.
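ASN rules usually come down to matching an IP against the address ranges a network announces. Here is a minimal sketch with Python's standard-library `ipaddress` module. The ranges are reserved documentation blocks standing in for ranges you identified from your own logs, and the function name is hypothetical.

```python
import ipaddress

# Stand-in ranges (reserved for documentation), not real offenders.
# Replace with ranges you attributed to an abusive network after log review.
THROTTLED_NETWORKS = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def needs_throttle(ip: str) -> bool:
    """Return True when `ip` falls inside one of the throttled ranges."""
    addr = ipaddress.ip_address(ip)
    return any(
        addr.version == net.version and addr in net
        for net in THROTTLED_NETWORKS
    )


print(needs_throttle("198.51.100.42"))  # True
print(needs_throttle("203.0.113.5"))    # False
```

Keeping the list short and time-boxed limits the chance of catching legitimate visitors who share a network with the abuser.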

Disclaimer: No single control stops every attacker. Use layered defenses and review logs weekly.

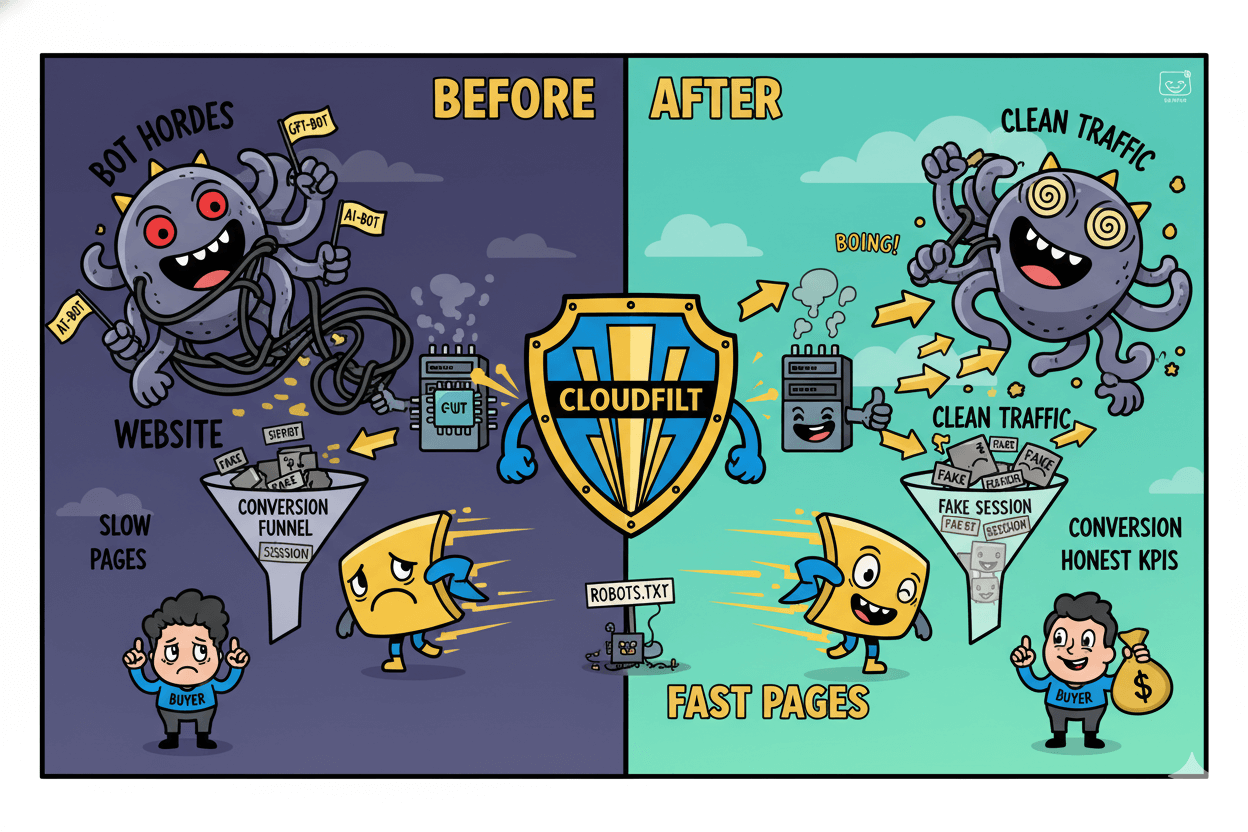

Clean traffic = faster pages and truer conversion math

Cutting crawler waste frees bandwidth and steadies Web Vitals as AI crawling rises.

Bandwidth savings reduce server work

Cloudflare’s 2025 crawl study found crawler traffic grew 18% YoY (May 2024→May 2025), with GPTBot up 305%. That surge eats capacity that should serve buyers. When sites block or meter heavy crawlers, they often see major bandwidth relief.

One public case from Read the Docs reported a 75% traffic drop after blocking AI bots, saving about $1,500/month in bandwidth alone. Result: fewer queue spikes, steadier response times.

Before and after: If you’re on shared or modest hosting, trimming crawler bursts can smooth CPU load and reduce 5xx errors during promos. Hosts and CDNs warn that aggressive AI crawling strains small sites out of proportion to their size.

Fewer fake sessions lift conversion math

Bot sessions dilute conversion rate, skew funnel steps, and flood attribution with noise. As big providers ship default AI-crawler blocking and even Pay Per Crawl controls, you can decide when to allow, charge, or block.

Cleaning traffic won’t “magically” raise revenue, but it makes KPIs honest so wins are visible and losses aren’t hidden by bot fog.
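A quick worked example shows the dilution. Conversion rate is conversions divided by sessions, so bot sessions inflate the denominator without adding conversions. The numbers below are invented for illustration only.

```python
def conversion_rate(conversions: int, sessions: int) -> float:
    """Conversions divided by sessions, or 0.0 when there are no sessions."""
    return conversions / sessions if sessions else 0.0


# Hypothetical numbers for illustration only.
total_sessions = 10_000
bot_sessions = 2_000
conversions = 160  # assumes bots do not convert

reported = conversion_rate(conversions, total_sessions)
cleaned = conversion_rate(conversions, total_sessions - bot_sessions)
print(f"reported: {reported:.2%}, cleaned: {cleaned:.2%}")
# reported: 1.60%, cleaned: 2.00%
```

Revenue is identical in both cases. Only the measurement changes, which is the point of the paragraph above.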

Myth buster: Robots.txt helps signal intent, yet some crawlers reportedly ignore or evade those rules via undeclared IPs or swapped user-agents. That’s why bot-manager rules and rate limits matter for the last mile.

Track Web Vitals and error rates after changes

Prove impact with a tight scorecard. Watch TTFB and LCP on key templates while you enforce blocks or charges. In parallel, track request volume, 4xx/5xx rates, and conversion rate.

If Vitals improve and noise falls without hurting good bots, you’re on the right settings. Revisit allow/charge/block monthly; policy and crawler behavior are evolving fast.

Disclaimer: No single control guarantees performance or conversions. Use layered defenses and review logs weekly.

Prove it: a 7-day plan and KPIs

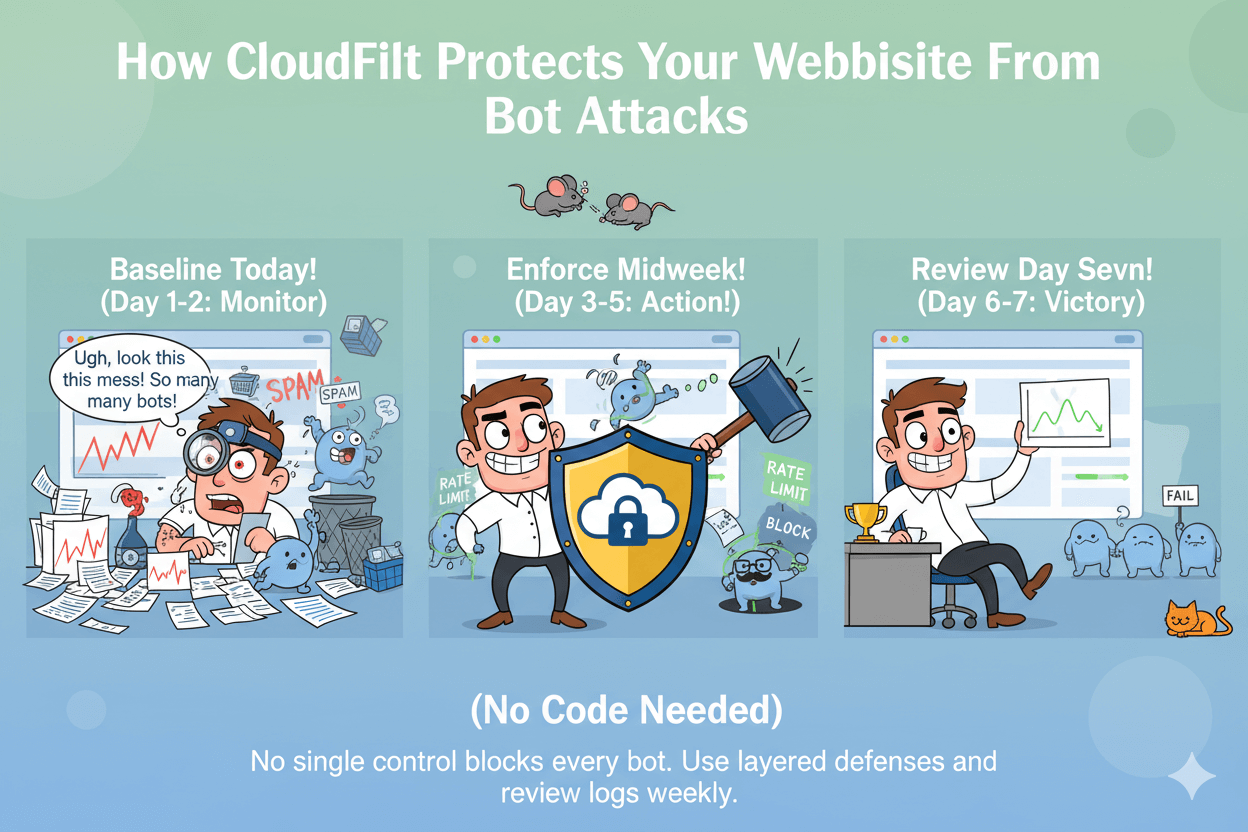

Baseline today, monitor first, enforce midweek, review day seven. Decide allow/charge/block.

Baseline metrics to capture today

Open GA4. Known bot-traffic exclusion is always on by design; you cannot turn it off or see how much was removed. Treat this as table stakes. Record current traffic, engagement, and key conversions. Note TTFB and LCP from your performance tool of choice.

Add filters for internal traffic so office visits do not skew your test. If you run staging or QA hits, filter those too.

KPIs to log daily: bot share in CloudFilt, total request volume, bandwidth used, spam form count, error rates, TTFB, LCP, conversion rate. Keep a simple sheet. The goal is direction and deltas, not perfect science on day one.

Daily review cadence for week one

Day 1–2: Monitor only. Compare CloudFilt events against GA4 segments for zero-engagement spikes, off-hour surges, and repeat ASNs. You will see patterns GA4 did not remove, because it filters only known bots.

Day 3–5: Enforce on clear abuse. Start with gentle rate limits on login and feeds. Block or challenge obvious bad UAs and clustered ASNs. Keep an eye on errors and Web Vitals after each change.

Day 6–7: Compare before and after. You want lower request volume, fewer spam submissions, steadier TTFB and LCP, and cleaner conversion math. If you see regressions, roll back the last rule and retest.
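If your simple sheet lives in a CSV or a notebook, the day-seven comparison can be a few lines of code. This sketch computes the percentage change per KPI and marks whether it moved in the right direction. The KPI names and the week-one numbers are hypothetical.

```python
# KPIs where a drop is the desired direction (names are assumptions).
LOWER_IS_BETTER = {
    "bot_share", "requests", "bandwidth_gb", "spam_forms",
    "error_rate", "ttfb_ms", "lcp_ms",
}


def kpi_deltas(baseline: dict, after: dict) -> dict:
    """Return {kpi: (percent_change, 'better' or 'check')}."""
    report = {}
    for name, before in baseline.items():
        now = after[name]
        change = (now - before) / before if before else 0.0
        if name in LOWER_IS_BETTER:
            improved = change < 0
        else:
            improved = change >= 0  # e.g. conversion rate steady or up
        report[name] = (round(change * 100, 1), "better" if improved else "check")
    return report


# Hypothetical week-one numbers.
baseline = {"requests": 50_000, "spam_forms": 40, "ttfb_ms": 800, "conversion_rate": 0.016}
after = {"requests": 40_000, "spam_forms": 10, "ttfb_ms": 600, "conversion_rate": 0.02}

report = kpi_deltas(baseline, after)
for name, (pct, verdict) in report.items():
    print(f"{name}: {pct:+.1f}% ({verdict})")
```

Anything marked "check" is the cue to roll back the last rule and retest, as described above.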

Decide block vs allow vs charge for AI crawlers

Policy is shifting. Cloudflare now lets you set crawler policy per domain: allow, charge, or block through Pay Per Crawl. Many customers also have default blocking of AI crawlers turned on by providers. Decide what fits your goals and revisit monthly.

What KPIs prove bot reduction and cleaner analytics? Look for bot share down, bandwidth down, spam down, TTFB down, LCP down, conversion rate steady or up. Validate trends in GA4 while confirming events in your bot dashboard.

FAQs and troubleshooting

Robots.txt is intent, not protection. Use rules and logs when bots misbehave.

If robots.txt is ignored

Robots.txt is advisory by design. Many reputable crawlers honor it, but non-compliant agents can ignore it or disguise their identity. Treat robots.txt as intent, not protection, and pair it with enforcement.

Action: Enable request-rate rules and behavioral challenges on high-risk paths and feeds. For persistent abuse, throttle or block by ASN until patterns clear.

Avoid over-blocking real users and good bots

Some crawlers are now blocked by default at major providers, and new “allow / charge / block” options exist. Decide policy per crawler and revisit monthly. Keep a short allowlist for essential bots and your partners.

Quick win: If search performance matters, confirm that Googlebot, Bingbot, and your uptime checker remain unimpeded after rule changes.
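A user-agent string alone does not prove a visitor is Googlebot, because anyone can copy it. Google documents a reverse-then-forward DNS check for this, and the sketch below follows that idea. Treat the suffix list as an assumption to confirm against Google's current documentation. The injectable lookup parameters are a testing convenience, not part of any official API, and the default forward lookup handles IPv4 only.

```python
import socket

# Reverse-DNS suffixes for Google crawlers (assumption: verify against
# Google's current crawler-verification documentation before relying on it).
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com", ".googleusercontent.com")


def is_verified_googlebot(ip: str, reverse_lookup=None, forward_lookup=None) -> bool:
    """Reverse-resolve `ip`, check the host suffix, then forward-confirm the IP."""
    reverse_lookup = reverse_lookup or (lambda addr: socket.gethostbyaddr(addr)[0])
    forward_lookup = forward_lookup or (lambda host: socket.gethostbyname_ex(host)[2])
    try:
        host = reverse_lookup(ip)
        if not host.lower().endswith(GOOGLE_SUFFIXES):
            return False
        # The forward lookup must map the hostname back to the same IP.
        return ip in forward_lookup(host)
    except OSError:
        return False  # lookup failed: treat as unverified


# Usage (performs live DNS queries):
# is_verified_googlebot("<ip from your logs>")
```

The forward confirmation matters because the owner of an IP controls its reverse DNS name and could set it to something that merely looks like a Google host.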

When to escalate with logs

Cloudflare reports that Perplexity evaded no-crawl directives via UA and ASN changes; Perplexity disputes the analysis. Keep objective evidence before escalation: offending UAs, paths, timestamps, and ASN/IP clusters. Present facts, not feelings.

Do’s and don’ts:

- Do store 7–14 days of samples before filing a ticket.

- Don’t rely on robots alone for sensitive paths.

- Do rotate challenges rather than permanent hard blocks if collateral risk is high.

Disclaimer: No single control blocks every bot. Use layered defenses and review logs regularly.

Conclusion

You have a clear path. Install CloudFilt on WordPress, start in Monitor, then enforce rules where the abuse is obvious. The official plugin connects your site in minutes, and the free allowance of 3,000 requests lets you prove impact before you pay.

Measure as you go. GA4 already excludes known bots automatically, but unknown traffic can still slip through. Baseline GA4 and your Web Vitals, compare segments against CloudFilt events, and watch for zero-engagement spikes, off-hour surges, and clustered ASNs.

When patterns persist, graduate from Monitor to targeted enforcement.

Expect the landscape to keep moving. AI and search crawlers grew rapidly through 2025, which explains why bandwidth and accuracy improve when you reduce waste requests.

Cloudflare now blocks many AI crawlers by default and offers Pay Per Crawl so you can allow, charge, or block based on your goals. Robots.txt remains useful as a signal, yet it is not sufficient on its own, so pair signals with enforcement.

Run the 7-day plan. Track bot share, bandwidth, spam form count, TTFB, LCP, and conversion rate. Keep what helps humans move faster and remove what only machines consume.

Keep your site simple, streamline your analytics, and make smarter marketing decisions with ease.

CloudFilt: Protect Your Website Today

Disclaimer: No single control blocks every bot. Use layered defenses and review logs regularly.