ThumbnailTest: Preview First, Win Faster

See your thumbnail exactly how viewers will—right in a real feed on phone and desktop. Fix tiny text, low contrast, or clutter in minutes with $0 tools so you only test strong options. Start sharp, win faster, and turn more scrollers into watchers.


You can test three thumbnails right inside YouTube Studio and let watch time share pick the winner. Preview on mobile, then run your first test today with $0 tools. Stack a title test when traffic allows.

In September 2025, YouTube also introduced native title A/B testing. It sits alongside your thumbnail tests, which means you can improve the whole package in the same workflow.

Use it when the idea is strong and you want proof on which phrasing earns the click and the watch.

Before you test, preview how each option reads in a real feed on mobile and desktop. Check contrast, focal point, and text legibility so you are testing good candidates, not guesswork.

A quick in-context mockup saves cycles and protects small channels from inconclusive results. – Thumblytics

This guide gives you the exact steps, smart run times, and plain-English metrics so you can learn fast, roll out winners, and build a repeatable system for growth.

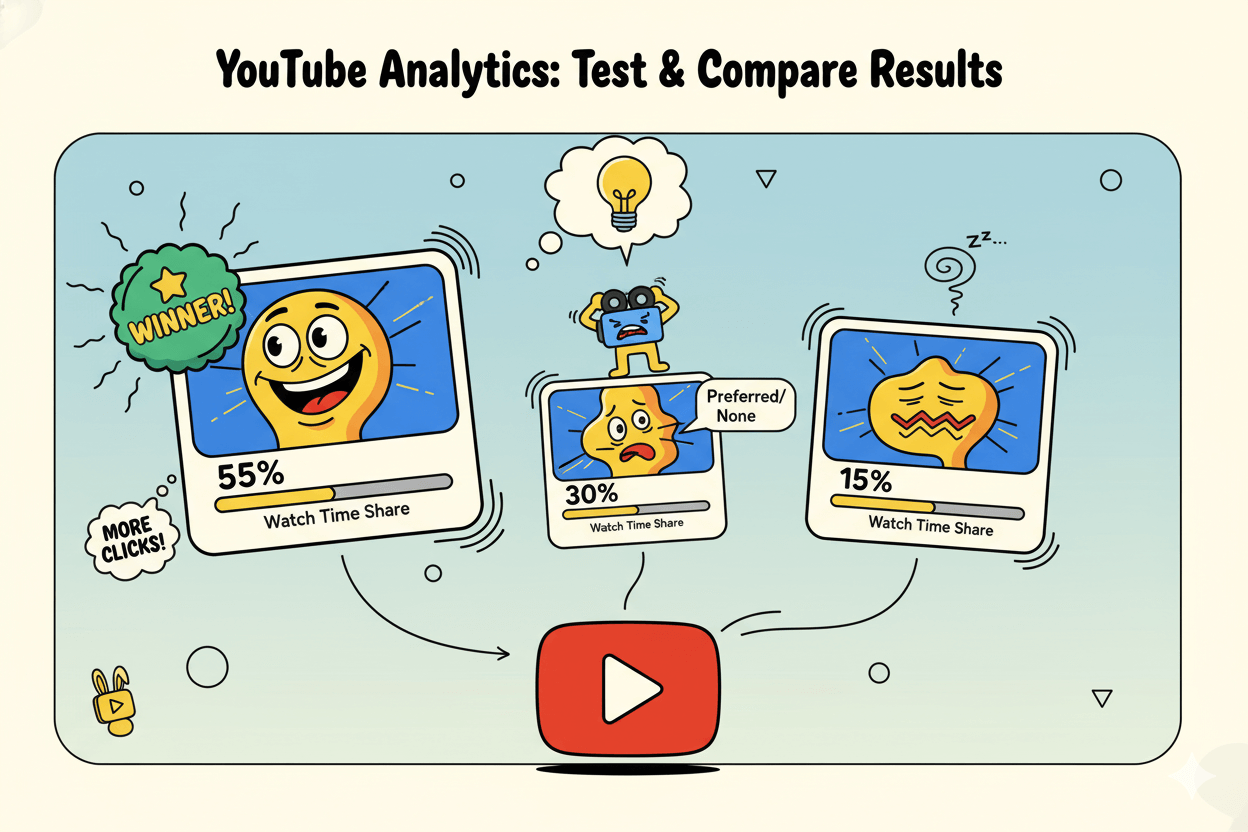

What “Test & Compare” does and how YouTube picks a winner

Studio rotates up to three thumbnails and picks a winner by watch time share.

YouTube Studio can rotate up to three thumbnail variants for a single video and report the outcome as Winner, Preferred, or None/Inconclusive. Results live in the Reach tab of YouTube Analytics.

Why “Winner” isn’t just CTR: YouTube bases the decision on watch time share during the test window, not raw clicks. Watch time is allocated to each variant, e.g., 30% vs 70%. That distribution drives the label when confidence is high.

Quick win: If traffic is modest, expect “Preferred” or “None.” That’s normal. Extend the run rather than swapping designs mid-test.

Definitions that matter: impressions, CTR, watch time share

- Impressions: How often your thumbnail was shown.

- CTR: Percent of impressions that became clicks. Useful, but not the deciding metric here.

- Watch time share: The share of total watch time earned by each tested thumbnail; YouTube uses this to determine a Winner. Example: A = 30%, B = 70% → B likely wins if confidence is sufficient.
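To make the definitions above concrete, here is a minimal Python sketch of the arithmetic. The function names and the sample numbers are illustrative assumptions; YouTube computes these figures itself and does not publish its exact method or confidence calculation.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a percentage of impressions."""
    if impressions <= 0:
        return 0.0
    return 100.0 * clicks / impressions


def watch_time_share(watch_minutes: dict[str, float]) -> dict[str, float]:
    """Each variant's share of the total watch time, as a percentage."""
    total = sum(watch_minutes.values())
    if total <= 0:
        return {name: 0.0 for name in watch_minutes}
    return {name: 100.0 * minutes / total for name, minutes in watch_minutes.items()}


# Hypothetical watch minutes chosen to reproduce the 30% vs 70% example.
shares = watch_time_share({"A": 300.0, "B": 700.0})
print(shares)                             # {'A': 30.0, 'B': 70.0}
print(ctr(clicks=50, impressions=1000))   # 5.0
```

A 70% share only suggests B is ahead. Whether Studio labels it Winner still depends on how much data sits behind the split.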

Where to find your Test & Compare report in Studio

Open the video in Studio, then see the experiment’s outcome inside YouTube Analytics → Reach. If a variant “clearly outperformed” the others, YouTube shows a Winner label. Otherwise you may see Preferred or None.

Limits and access notes creators miss

- Variant cap: Up to three thumbnails per test.

- Outcomes vary: It’s common not to see a Winner. Treat “Preferred/None” as a cue to run longer or test more distinct designs later.

- Shorts caveat: Test & Compare is for long-form videos; Shorts use different thumbnail handling.

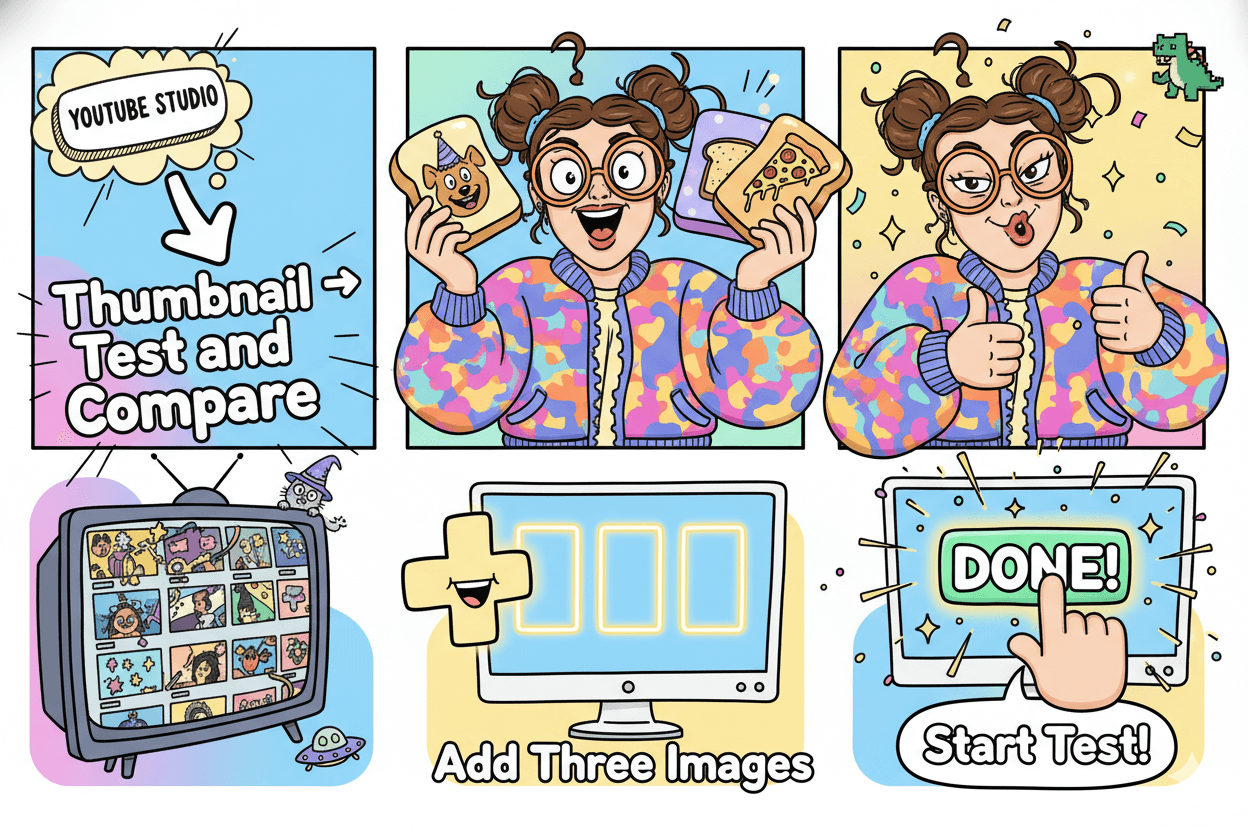

Set up a 3-variant thumbnail test in Studio

Add three thumbnails, start the test, and let Studio call the result.

You can run a full thumbnail experiment in minutes. The key is loading three strong options, starting the test on a published video, and letting Studio call the result when enough data lands.

Here is the clean path that aligns with YouTube’s own guidance.

The 15-minute setup

- Open YouTube Studio → Content → choose your video.

- Under Thumbnail, click Test and compare.

- Upload up to three thumbnails to test and click Done.

- The test begins after the video is published. You can Stop and set any time to lock a choice.

What results you’ll see

Studio labels outcomes using watch time share at the end of the run:

- Winner when one variant clearly outperforms others with statistical confidence.

- Preferred when one looks better but not with high certainty.

- None/Inconclusive when variants perform similarly.

Find the report in Analytics → Reach.

How long to run it

YouTube notes results may take a few days or up to two weeks, driven by your video’s impressions and the diversity of the thumbnails. More traffic and more distinct designs tend to end tests faster.

If your traffic is light, expect Preferred or None more often and plan for longer windows.

Guardrails and caveats

- Eligibility: Desktop Studio only, with advanced features enabled; public long-form videos and podcast episodes qualify. Premieres become eligible after they convert to long-form.

- Shorts: Not supported. If a video transitions to a Short, prior experiments end.

- Resolution: Use high-resolution art; thumbnails below 1280×720 trigger downscaling of all variants to 854×480 during the test.

- Control group: A small control audience only sees the default thumbnail; their data is excluded from experiment calculations.
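The resolution guardrail is easy to check before you upload. The sketch below is a hypothetical helper, not a YouTube API. It assumes “below 1280×720” means either dimension falls short, which is an interpretation of the rule rather than a documented definition.

```python
FULL_SIZE = (1280, 720)        # minimum size that avoids downscaling
DOWNSCALED_SIZE = (854, 480)   # size all variants are shown at otherwise


def will_downscale(variant_sizes: list[tuple[int, int]]) -> bool:
    """Return True if any variant is small enough to downscale the whole test.

    Assumption: a variant counts as "below 1280x720" when its width OR
    its height is under the threshold.
    """
    return any(
        width < FULL_SIZE[0] or height < FULL_SIZE[1]
        for width, height in variant_sizes
    )


# Hypothetical upload: two full-size variants and one undersized draft.
sizes = [(1280, 720), (1920, 1080), (640, 360)]
if will_downscale(sizes):
    print(f"All variants would be shown at {DOWNSCALED_SIZE[0]}x{DOWNSCALED_SIZE[1]}")
```

One undersized file affects every variant, so it is worth checking the whole set rather than each image on its own.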

When to intervene

If the test drags without a Winner, your variants may be too similar or the video lacks impressions. Let it run toward the upper bound if possible.

If timelines are tight, use Stop and set to pick the best candidate and schedule a fresh, more differentiated test later.

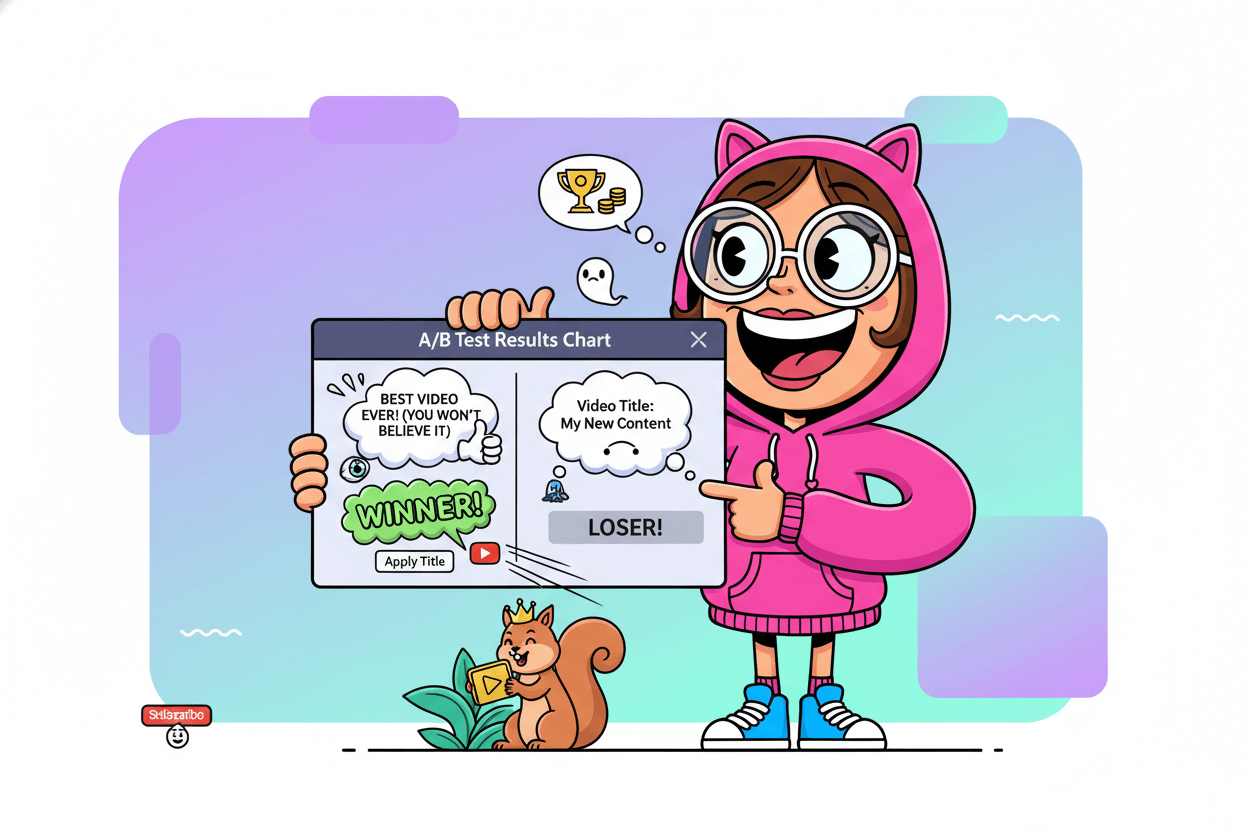

Title + thumbnail combo testing in 2026

Title A/B testing is live in Studio. Pair it smartly with thumbnails.

YouTube now lets you test titles inside Studio. It sits next to your thumbnail experiments, so you can improve the package people see first.

The feature was announced on September 16, 2025 and is rolling out with the Made on YouTube updates.

When to pair vs stagger. If your video earns steady impressions, you can run a thumbnail test and then a title test in close sequence. On smaller channels, change one thing at a time to keep the read clean.

Start with the element you believe is the bottleneck. If people see the video but do not click, test the thumbnail first. If they click but do not watch long, try title positioning and promise clarity next. The goal is clear attribution… not guesswork.

How title testing works today. In Studio you create multiple title variants. YouTube rotates them to viewers and reports the result in Analytics, similar in spirit to thumbnail testing.

Coverage from Google’s and YouTube’s official blogs confirms the addition; trade press summarizes access and behavior. Some reporting notes up to three titles per test. Expect access to expand over time.

Clean combos without confounds. Avoid running a new title and a new thumbnail at the exact same moment on low traffic. Stagger by a few days or until one test reaches a clear label.

Document each variant’s hook so you do not accidentally test two ideas at once. Then apply the winner and move on to the next experiment. This keeps signals readable and prevents “inconclusive” streaks. – Gyre

Before and after:

Before: One title changed blindly every few days.

After: Three clear title ideas queued, each tested to a confident result, then paired with a proven thumbnail for compounding lift.

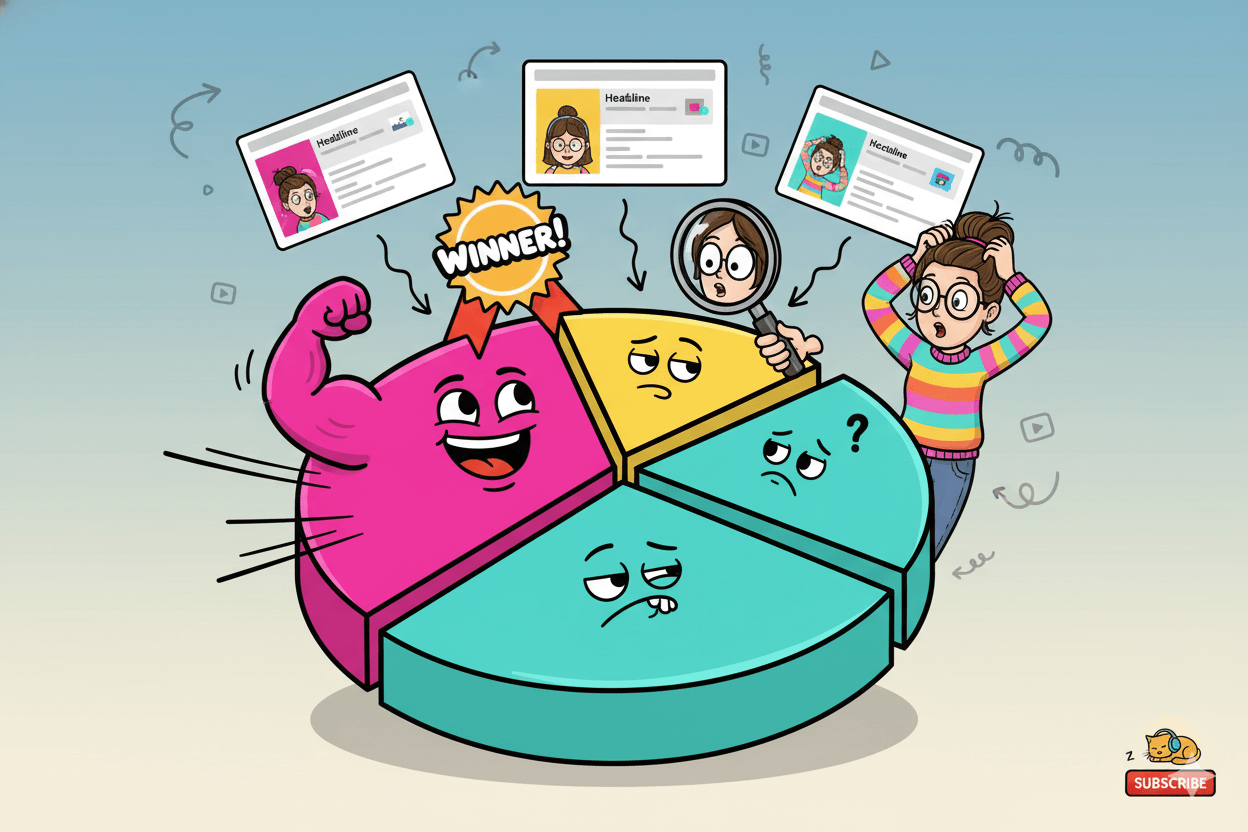

Read the numbers: impressions, CTR, and watch time share

Clicks start the story. Watch time picks the winner.

Clicks start the story. Watch time finishes it. In Test & Compare, YouTube decides outcomes using watch time share, not CTR alone.

That’s why a thumbnail with fewer clicks can still win if it drives longer viewing once people arrive.

What each metric means in testing.

- Impressions: how often a variant was shown during the experiment.

- CTR: the share of impressions that became clicks; helpful for understanding attraction power.

- Watch time share: the share of total watch time each variant earned; YouTube labels a Winner when one clearly outperforms others on this metric with statistical confidence. Outcomes may also be Preferred or None/Inconclusive when evidence is weaker.

How YouTube applies the label.

YouTube rotates up to three thumbnails and monitors viewer behavior. When one variant “clearly outperformed the other thumbnails based on watch time share,” Studio marks it Winner.

If results lean in one direction but lack strong certainty, you may see Preferred; if variants perform similarly, you get None/Inconclusive.

Reading mixed signals.

A common case: CTR up, watch time share down. That can happen if a punchy image drives clicks but overpromises, leading to short sessions.

Prioritize the variant with stronger watch time share, since it aligns with session value and drives the label YouTube uses to pick the best performer.
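A short worked example shows how the lower-CTR variant can still come out ahead. Every number here is hypothetical, and the sketch assumes both variants receive equal impressions and that watch time is roughly views multiplied by average view duration.

```python
def watch_minutes(impressions: int, ctr_percent: float, avg_view_minutes: float) -> float:
    """Estimated watch time: impressions -> views -> minutes watched."""
    views = impressions * ctr_percent / 100.0
    return views * avg_view_minutes


# Variant A: punchy image, more clicks, shorter sessions.
a = watch_minutes(10_000, ctr_percent=6.0, avg_view_minutes=2.0)   # 1200.0 minutes
# Variant B: fewer clicks, but viewers stay twice as long.
b = watch_minutes(10_000, ctr_percent=4.0, avg_view_minutes=4.0)   # 1600.0 minutes

total = a + b
print(f"A: {100 * a / total:.1f}% of watch time")   # A: 42.9% of watch time
print(f"B: {100 * b / total:.1f}% of watch time")   # B: 57.1% of watch time
```

Under these assumptions A earns 50% more clicks than B, yet B holds the larger watch time share, which is the metric the label follows.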

When results are inconclusive.

On lower-traffic videos, many tests end Preferred or None because there is too little data to separate the variants. Extend the window or test more distinct designs. Treat “inconclusive” as a learning signal about similarity or insufficient impressions, not as failure.

Practical decision rules.

- If Winner appears, apply it.

- If Preferred, consider extending or rerunning with bigger creative distance.

- If None, redesign around contrast, focal point, or text footprint before retesting. These moves increase separation in watch time share and speed up resolution.
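If you track tests in a script or spreadsheet export, the rules above can be written as a small lookup. This is a sketch with hypothetical names. The label strings mirror what the article describes, and you would enter them by hand, since nothing here reads from Studio.

```python
from enum import Enum


class Outcome(Enum):
    WINNER = "Winner"
    PREFERRED = "Preferred"
    NONE = "None/Inconclusive"


# Next steps taken directly from the decision rules above.
NEXT_STEP = {
    Outcome.WINNER: "Apply the winning thumbnail.",
    Outcome.PREFERRED: "Extend the test or rerun with bigger creative distance.",
    Outcome.NONE: "Redesign around contrast, focal point, or text footprint, then retest.",
}


def next_step(label: str) -> str:
    """Map a Studio result label to the suggested action."""
    return NEXT_STEP[Outcome(label)]


print(next_step("Preferred"))
```

An unrecognized label raises `ValueError`, which is deliberate: a typo in your log should fail loudly rather than map to the wrong action.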

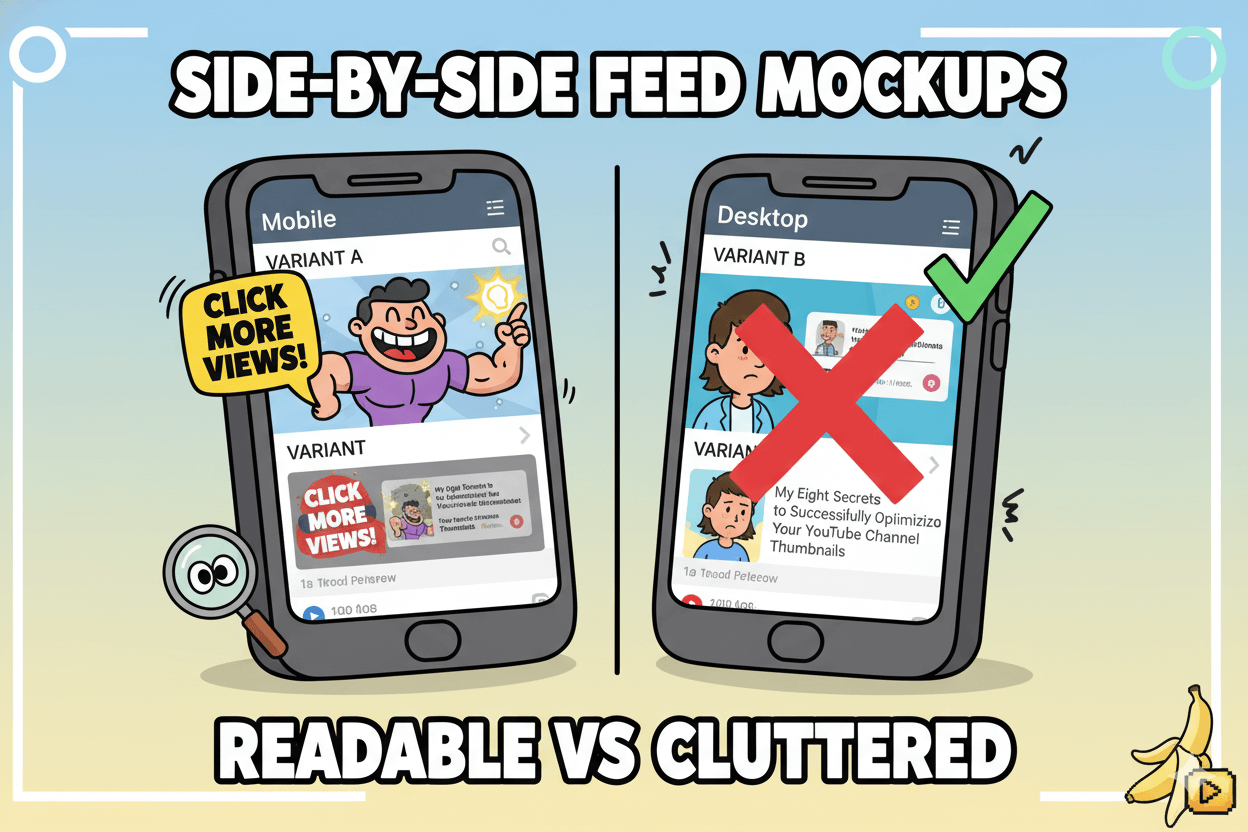

Pro tip: Before launching a new round, preview variants in a mobile feed mockup. This helps catch low-contrast or cluttered designs that inflate clicks without sustaining watch time. Stronger candidates produce clearer watch time share splits. – Streamlabs

Small-channel playbook: getting conclusive results

Change one thing, run longer, and widen the visual gap.

You can get clean reads with modest traffic. Focus your tests, widen differences between variants, and give Studio enough time to decide.

That keeps “Preferred” or “None/Inconclusive” outcomes from piling up and turns testing into a simple weekly habit.

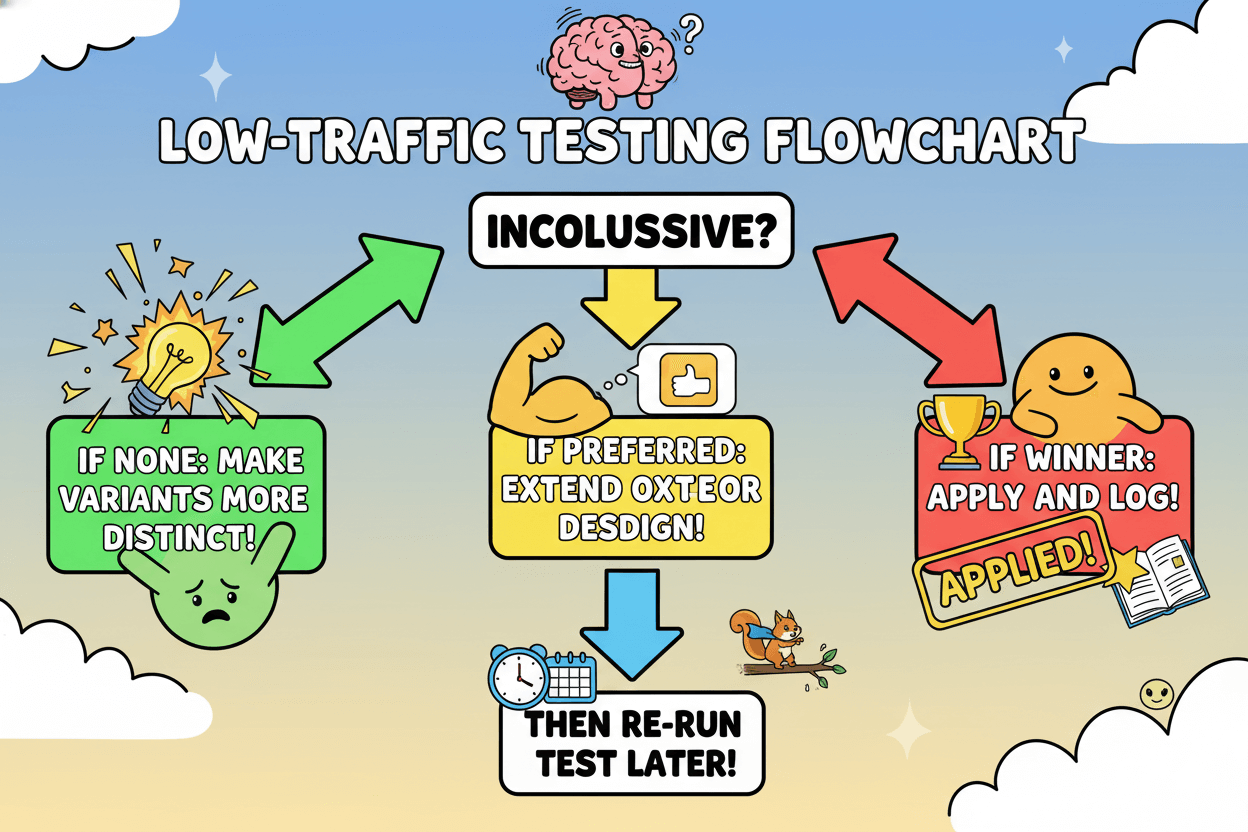

Decision tree for <1k views/month

Start with one variable. Change either the thumbnail or the title, not both. If your impressions are low, stagger tests so each result is attributable.

YouTube rotates up to three thumbnails and labels Winner, Preferred, or None based on watch time share, so distinct creative choices help the system separate performance faster.

- If a test ends None/Inconclusive, your variants likely read too similarly or you lacked impressions. Increase contrast, simplify text, or swap composition so the hook is unmistakable on a phone screen, then rerun.

- If you see Preferred, extend the window or queue a sharper redesign before applying anything.

Two safe schedules: 72-hour scan and 14-day read

Use a 72-hour scan for a directional read on videos that already get steady impressions. If traffic is light or variants are close, let the test run up to about two weeks so Studio can accumulate evidence and call a Winner with confidence.

These ranges reflect common creator practice and align with YouTube’s guidance that results may take a few days to two weeks depending on impressions and variation. – Verge

Pro tip: Preview on mobile before you test. A quick feed mockup catches low-contrast art or tiny text that wastes impressions and slows resolution.

When to accept “inconclusive” and move on

On very small datasets, inconclusive is a useful signal, not a failure. Treat it as proof that the idea needs bigger separation or that the video needs more impressions before testing pays off.

Apply your best candidate, then schedule a fresh run after you publish two or three new videos or when the target video’s impressions rise.

Community rollouts and practitioner guides consistently note that watch time share, not CTR alone, drives the label—optimize for clarity and retention, not clickbait.

Design variables to test: color, faces, text, composition

Design for phone first: contrast, one focal point, few words.

Design for the smallest screen first. Your thumbnail has milliseconds to land. Start with strong contrast, a single focal point, and short hook text.

Validate each idea in a mobile feed preview before you test so you compare winners… not guesses.

Contrast first: background vs subject

High contrast makes details readable at phone size. Favor a clear subject against a simple background, then sanity-check legibility in a live-style feed mockup across mobile and desktop.
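If you want a number to go with the eyeball check, one option is the WCAG contrast ratio between your text color and the background behind it. This is a borrowed accessibility metric, not something YouTube uses or recommends, and the sample colors below are hypothetical. Treat it as a rough proxy alongside the feed mockup, not a replacement for it.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """WCAG relative luminance of an sRGB color, from 0.0 to 1.0."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a: tuple[int, int, int], color_b: tuple[int, int, int]) -> float:
    """WCAG contrast ratio, from 1.0 (identical) up to about 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


# Hypothetical colors: yellow hook text over a dark navy background.
print(round(contrast_ratio((255, 221, 0), (10, 20, 60)), 1))
```

WCAG suggests at least 4.5:1 for body text on web pages. Thumbnail text is shown much smaller in a feed, so aiming well above that is a reasonable, if unofficial, starting point.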

Keep files within YouTube’s specs so compression doesn’t blur edges: 1280×720, 16:9, JPG/PNG/GIF, under 2 MB for videos.
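The spec in the previous paragraph can be turned into a quick pre-upload check. This sketch uses a hypothetical function that takes the dimensions, file size, and extension as plain values, so it needs no image library. It assumes 1280×720 is a minimum and that “2 MB” means 2 × 1024 × 1024 bytes.

```python
MAX_BYTES = 2 * 1024 * 1024                      # assumption: "2 MB" read as 2 MiB
ALLOWED_EXTENSIONS = {"jpg", "jpeg", "png", "gif"}


def thumbnail_problems(width: int, height: int, size_bytes: int, extension: str) -> list[str]:
    """Return a list of spec problems; an empty list means the file looks fine."""
    problems = []
    if width < 1280 or height < 720:
        problems.append(f"resolution {width}x{height} is below 1280x720")
    if width * 9 != height * 16:
        problems.append("aspect ratio is not 16:9")
    if size_bytes >= MAX_BYTES:
        problems.append("file is not under 2 MB")
    if extension.lower().lstrip(".") not in ALLOWED_EXTENSIONS:
        problems.append(f"format '{extension}' is not JPG, PNG, or GIF")
    return problems


# Hypothetical file: correct size and shape, but saved too large.
print(thumbnail_problems(1280, 720, 3_500_000, ".png"))
# ['file is not under 2 MB']
```

Returning a list instead of a single pass or fail flag lets you fix everything in one export rather than discovering problems one upload at a time.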

Faces and eye direction tests

Human faces often attract attention. Many practitioners report higher CTR when expressive faces lead the frame; still, treat this as a testable hypothesis, not a rule.

Run one version with a clear facial expression and eye-line pointing to the key object or text, and another without a face to see which earns better watch outcomes.

Text footprint and hook words

Use very few words. Let the image carry the idea, and keep any text large, high-contrast, and away from busy edges so it survives on small screens.

YouTube’s own tips showcase thumbnails where title and image work together to tell a simple story.

Test variants like “verb + outcome” vs “numbered promise,” and validate each candidate in a feed preview before launching your Studio experiment.

Retest your back catalog and roll out winners

Evergreen videos are perfect test beds. Apply winners and log changes.

Evergreen videos can earn a second life. Start with uploads that still get steady impressions.

Queue a thumbnail test with three distinct options, let Studio rotate them, then apply the label YouTube gives you when the data is clear. If traffic is modest, expect Preferred or None more often and plan longer windows.

Pick candidates with steady impressions

Open Analytics and scan for videos with consistent reach over the last 28–90 days. Those videos give tests the impressions they need. Add up to three variants in Test & Compare, and keep the video public while the test runs.

YouTube selects Winner, Preferred, or None/Inconclusive based on watch time share.

Pro tip: Before launching, preview each option in a mobile and desktop feed mockup. Catch low contrast or tiny text so you are testing strong candidates, not guesses.

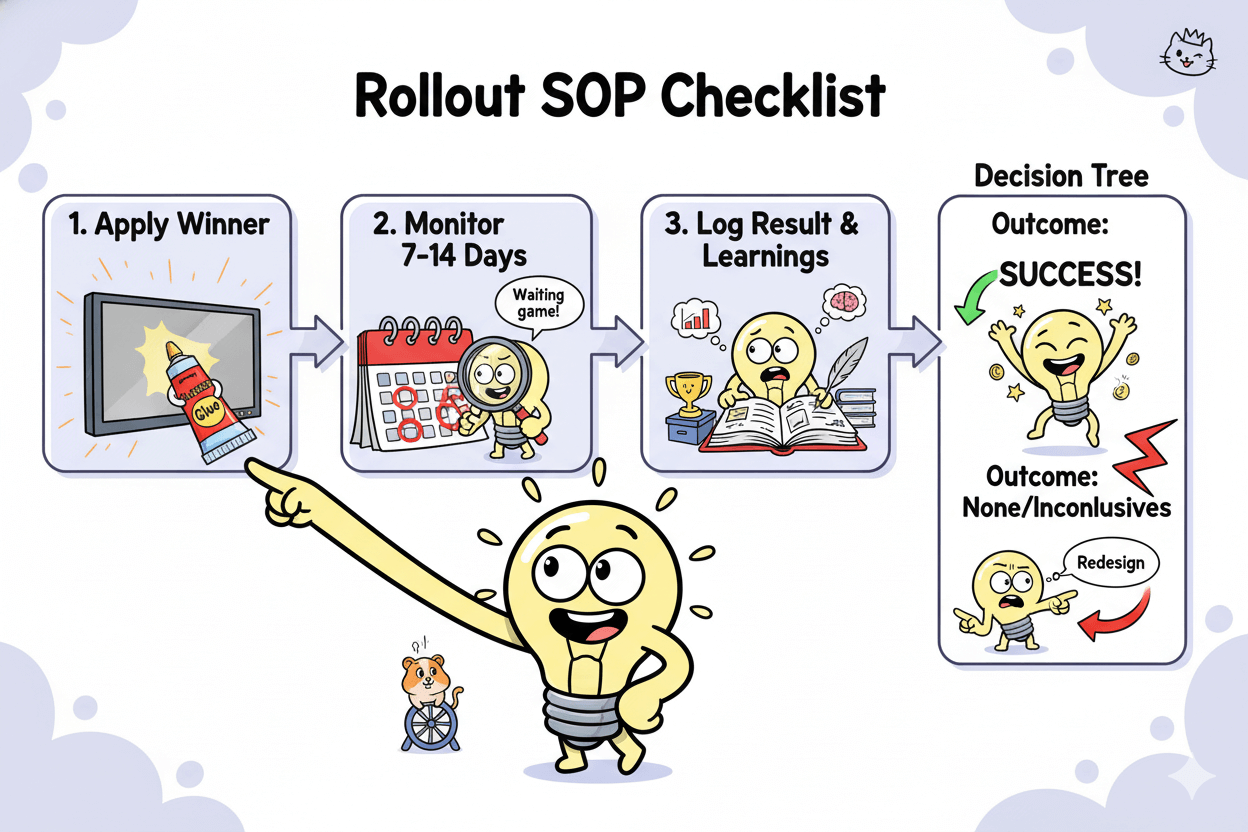

Rollout checklist and monitoring window

When Studio calls a Winner, click Stop and set to lock it. If the test is still running but you must decide, you can stop and choose manually; just note this will end the experiment.

After rollout, monitor performance for a week or two to ensure the lift holds on non-test traffic. Practitioner guides note you cannot resume a stopped test, so plan your timing.

Does testing hurt views? Tests rotate variants across viewers. Duration depends on impressions and how different your designs are.

Expect normal fluctuation during the run; outcomes usually finalize in a few days to two weeks. Distinct variants resolve faster. – Search Engine Journal

Archive losers and notes for future tests

Keep a simple changelog: date, variants, design notes, outcome, and post-rollout metrics. Over time you will see patterns—contrast palettes that read best, text footprints that hold attention, and subject framing that sustains watch time.

Use those patterns to guide the next round and to decide when a back-catalog video deserves another pass.
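A changelog like this can live in a spreadsheet, but if you prefer a file you can script against, here is one possible shape. The class name, field names, and sample entry are all hypothetical. The fields follow the list above: date, variants, design notes, outcome, and post-rollout metrics, plus the video being tested.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ExperimentLogEntry:
    date: str
    video: str
    variants: str
    design_notes: str
    outcome: str
    post_rollout_metrics: str


def to_csv(entries: list[ExperimentLogEntry]) -> str:
    """Serialize log entries to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(ExperimentLogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()


# Hypothetical entry for illustration only.
entry = ExperimentLogEntry(
    date="2025-10-01",
    video="Example back-catalog video",
    variants="A: face + 2 words; B: object close-up; C: no text",
    design_notes="B uses the highest subject-background contrast",
    outcome="Preferred",
    post_rollout_metrics="pending",
)
print(to_csv([entry]))
```

Write the returned text to a file with `open(path, "w", newline="")` if you want to keep the log on disk. Free-text fields keep the log flexible while patterns are still emerging.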

Conclusion

You now have a clear loop. Preview your ideas in a real feed, ship three distinct thumbnails, and let Studio decide on watch time share.

When a Winner appears, apply it. If you see Preferred or None, extend the window or redesign with bigger visual separation. Keep each round simple so the read is clean.

Fold in title tests when traffic allows. YouTube announced native title A/B testing on September 16, 2025, so you can iterate the whole package inside Studio.

Stagger title and thumbnail tests on smaller channels to avoid confounds. Sequence the one you believe is the bottleneck first.

Use practical rhythms. Most tests resolve in a few days to two weeks, driven by impressions and how different the variants are. On low traffic, queue tests across steady back-catalog videos to gather evidence faster.

Document each change and keep what wins. This is how small channels gain compounding clarity.

Design for tiny screens. Prioritize subject–background contrast, a single focal point, and minimal text. Validate legibility with a feed mockup before you test so you spend impressions on strong options.

Maintain YouTube’s basic spec so assets stay crisp across surfaces.

Your next step is simple. Pick one video with steady impressions. Preview three bold thumbnail ideas. Launch Test & Compare. Watch the result and apply the winner.

Then plan a title round. Repeat weekly. The system is the strategy.
